AI and the Technological Singularity

What is the technological singularity, and why should we care about it?

The technological singularity marks the point beyond which prediction fails. The theory holds that machines will become so advanced that they gain an intelligence of their own. This intelligence will replicate independently of humans and compound until it reaches super-intelligence: an intelligence greater than that of a human being. Intelligence is subjective, but my definition involves an entity defining its own goals and then making decisions in an effort to achieve them. Should technology reach this state, the range of possible outcomes is so vast, and the outcomes so dramatically different from one another, that no quantifiable predictions can be made about how the event will affect the world or human beings themselves.

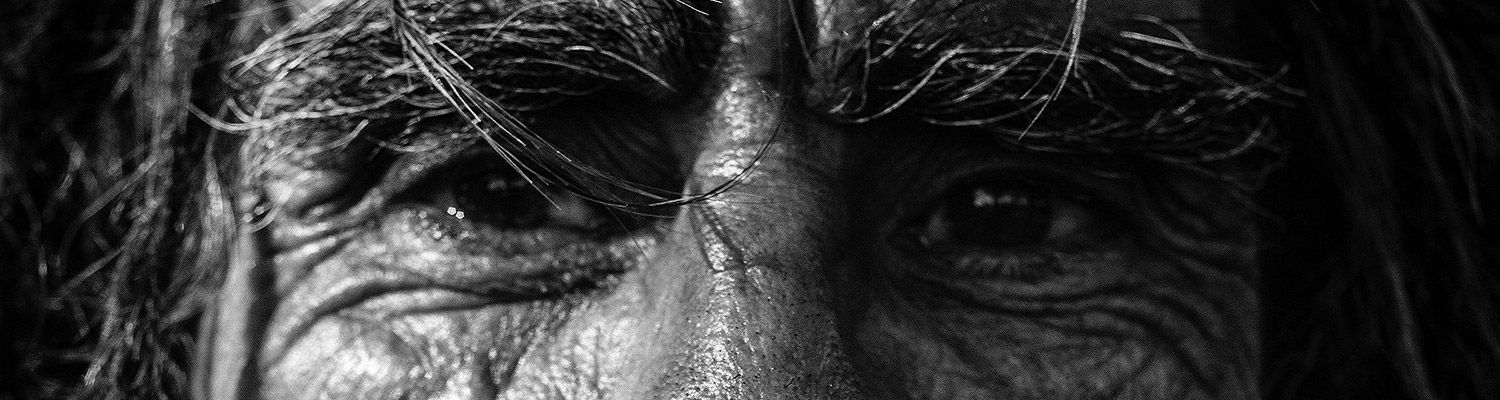

I suppose “concern” is natural, since the unknown has always led to fear and opens the door to potential worst-case scenarios. However, the singularity is uncontrollable and, if it’s technologically possible, unavoidable. It’s like worrying about the weather. But, unlike the weather, no experts can predict whether the singularity will bring rain or shine. For some reason, I’ve always imagined the singularity as a fusion of man and machine, rather than a division. Evolution of the species. Progress with lots of unintended consequences. Picture a human brain integrated with bionic extremities and a Wi-Fi connection, a fusion that lets the brain draw on weak-AI systems for outstanding computing and learning capabilities and gives it access to the internet and the vast knowledge and databases associated with it. At what point does a human become a machine, or a machine a human?

Strong AI within an independent entity is a separate concern. The only thing that separates us from all other species on the planet is our superior intelligence. This intelligence allows us to dominate other species, control portions of our environment, and aid and advance our survival. Should another entity come along that surpasses us as the leading intelligence on the planet, our destiny would effectively fall into its hands. Pragmatically speaking, there are only two conditions that would ensure humanity’s survival. One, we pose no existential threat to the new technological super-intelligence. It is so far beyond us, or so different from us, that it doesn’t need to worry about us. Two, we provide some sort of use or function for it. It needs us for something. Failure to meet at least one of these two conditions would create competition for resources and dominance. If an equilibrium within a new ecosystem can’t be established, one side may decide to push the other out, with the odds favoring the side with superior intelligence.